Introduction: Rust Rises in Deep Learning with the Burn Framework

The deep learning landscape is in constant evolution, with a growing emphasis on performance, flexibility, and deployment across diverse hardware. The Rust programming language has emerged as a compelling choice for building high-performance, reliable software. Its memory safety, efficient memory management without a garbage collector, and concurrency support make it well suited to the computationally intensive nature of machine learning. The Burn framework is a significant development, offering an approach to deep learning that leverages Rust’s strengths for machine learning engineers and researchers in both industry and academia. Unlike frameworks that adapt existing Python-based solutions, Burn is architected from the ground up in Rust to address limitations of the current deep learning ecosystem. Rather than merely replicating existing functionality, it aims to provide a versatile and efficient platform for developing and deploying intelligent systems.

To explore Burn’s capabilities effectively, it helps to understand four terms: “tch,” “burn,” “burn-tch,” and “burn-train.” Burn refers to the core framework, a comprehensive, dynamic deep learning framework built in Rust. Its primary design objectives are adaptability, computational efficiency, and cross-platform compatibility. Tch refers to the tch-rs crate, a set of Rust bindings for LibTorch. LibTorch is the C++ library underpinning PyTorch, a widely adopted open-source machine learning framework.

Within the Burn ecosystem, burn-tch denotes a specific crate (a Rust package) that serves as the LibTorch backend for Burn, built on the tch-rs bindings. This integration allows developers to execute Burn models using PyTorch’s highly optimized C++ kernels (via LibTorch) across various hardware: CPUs, NVIDIA GPUs (with CUDA), and Apple GPUs (with Metal, through MPS). Finally, burn-train refers to Burn’s training functionality: the modules and tools engineered for training deep learning models, which ship as a dedicated burn-train crate. These terms represent distinct yet interconnected components within the Rust deep learning ecosystem, with Burn at the center, leveraging PyTorch’s backend via burn-tch and providing training tools through burn-train.

Burn Framework: Core Concepts and Architecture Overview

The Burn framework is engineered around three core principles, performance, flexibility, and portability, for both training and inference. Several architectural features support them. Burn pursues speed through optimizations such as a JIT compiler, kernel fusion, and asynchronous execution. It is designed for composability, giving developers the flexibility to implement ambitious modeling ideas without sacrificing reliability or efficiency. It achieves portability by abstracting the backend, enabling deployment across a wide range of hardware: laptops with GPUs, large-scale cloud training clusters, and resource-constrained embedded devices. Reliability is a cornerstone as well: Rust’s memory safety and concurrency guarantees support performance while improving security and stability.

Burn provides an intuitive, clearly documented modeling API, simplifying neural network definition. It incorporates a dynamic computational graph and a custom JIT compiler, enhancing flexibility and efficiency by optimizing computations at runtime. Burn supports multiple backends (CPUs and GPUs), letting developers choose the best hardware. It also includes comprehensive support for training: logging, metric tracking, and checkpoint saving – a true “Batteries Included” approach.

At a granular level, Burn introduces an architecture centered on tensor operation streams. These streams are optimized at runtime and automatically tuned for the specific hardware by an integrated JIT compiler. The approach relies on Rust’s ownership rules to track tensor usage precisely, something that Rust’s robust type system makes possible. The result is highly dynamic models with performance comparable to optimized static graph frameworks. A key aspect is Burn’s backend-agnostic nature. It supports WGPU (using WebGPU for cross-platform GPU support), Candle, LibTorch (through burn-tch), and NdArray. Backends can also be composed: a base backend can be wrapped to add features such as automatic differentiation and kernel fusion.
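The idea of backend composition can be illustrated with a small, std-only sketch. It is a toy model under stated assumptions, not Burn’s API: the `Backend` trait, the `Cpu` type, the `Autodiff` wrapper, and the `double` function are all hypothetical names, and plain vectors stand in for tensors. It shows model code written once against a trait, and a decorator type that wraps any base backend and delegates the computation to it.

```rust
use std::marker::PhantomData;

// Toy stand-in for a backend abstraction; hypothetical, not Burn's trait.
trait Backend {
    fn name() -> String;
    // Element-wise addition on plain slices standing in for tensors.
    fn add(a: &[f32], b: &[f32]) -> Vec<f32>;
}

// A base backend that computes on the CPU.
struct Cpu;

impl Backend for Cpu {
    fn name() -> String {
        "cpu".to_string()
    }
    fn add(a: &[f32], b: &[f32]) -> Vec<f32> {
        a.iter().zip(b).map(|(x, y)| x + y).collect()
    }
}

// A decorator backend: wraps any base backend and layers a feature on top.
// Here the only visible "feature" is the changed name; a real decorator
// would, for example, record operations for automatic differentiation.
#[allow(dead_code)]
struct Autodiff<B: Backend>(PhantomData<B>);

impl<B: Backend> Backend for Autodiff<B> {
    fn name() -> String {
        format!("autodiff<{}>", B::name())
    }
    fn add(a: &[f32], b: &[f32]) -> Vec<f32> {
        B::add(a, b) // delegate the computation to the wrapped backend
    }
}

// Model code is written once, generic over the backend.
fn double<B: Backend>(x: &[f32]) -> Vec<f32> {
    B::add(x, x)
}

fn main() {
    println!("{}: {:?}", Cpu::name(), double::<Cpu>(&[1.0, 2.0]));
    println!(
        "{}: {:?}",
        Autodiff::<Cpu>::name(),
        double::<Autodiff<Cpu>>(&[1.0, 2.0])
    );
}
```

Swapping the type parameter is the only change needed to run the same `double` function on a different or decorated backend, which is the property the paragraph above describes.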

To enhance performance, Burn uses automatic kernel fusion, dynamically creating custom kernels to minimize data movement, which is crucial when memory transfer is the bottleneck. Backends developed by the Burn team use an asynchronous execution style. This enables optimizations like kernel fusion and ensures that the framework does not block model computations, minimizing overhead; conversely, intense model computations do not interfere with the framework’s responsiveness. Burn emphasizes thread safety via Rust’s ownership system. Each module owns its weights, so a module can be sent to another thread to compute gradients, and the gradients can then be aggregated in the main thread, which facilitates multi-device training.
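The ownership-based pattern for multi-device training can be sketched with the standard library alone. This is an illustration under assumptions, not Burn code: `Linear`, `grad`, and `averaged_grad` are hypothetical names, a single `f32` stands in for the weights, and threads stand in for devices. Each worker receives its own owned replica of the module, computes a gradient on its shard of data, and the calling thread averages the results.

```rust
use std::thread;

// Toy module that owns its single weight; hypothetical, not Burn's Module.
#[derive(Clone)]
struct Linear {
    weight: f32,
}

impl Linear {
    // Gradient, with respect to `weight`, of the mean squared error of
    // `weight * x` against `y` over one shard of (x, y) pairs.
    fn grad(&self, shard: &[(f32, f32)]) -> f32 {
        let sum: f32 = shard
            .iter()
            .map(|(x, y)| 2.0 * (self.weight * x - y) * x)
            .sum();
        sum / shard.len() as f32
    }
}

// Send one owned replica of the module to each worker thread, then
// average the per-shard gradients back on the calling thread.
fn averaged_grad(model: &Linear, shards: Vec<Vec<(f32, f32)>>) -> f32 {
    let n = shards.len() as f32;
    let handles: Vec<_> = shards
        .into_iter()
        .map(|shard| {
            let replica = model.clone(); // moved into the thread below
            thread::spawn(move || replica.grad(&shard))
        })
        .collect();
    let total: f32 = handles.into_iter().map(|h| h.join().unwrap()).sum();
    total / n
}

fn main() {
    let model = Linear { weight: 1.0 };
    let shards = vec![vec![(1.0, 2.0)], vec![(2.0, 4.0)]];
    println!("averaged gradient: {}", averaged_grad(&model, shards));
}
```

Because each closure takes ownership of its replica and its shard, the compiler can verify that no weights are shared mutably across threads.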

burn-tch: Bridging the Gap with PyTorch

Technical Implementation and Backend Support

The burn-tch crate bridges Burn and the PyTorch library. Technically, burn-tch provides a Torch backend for Burn using the tch-rs crate, which offers a safe, idiomatic Rust interface to PyTorch’s C++ API (LibTorch). This lets Rust developers using Burn leverage PyTorch’s computational capabilities without direct Python interaction or C++ interop complexities.

The burn-tch backend supports CPUs (with multithreading), NVIDIA GPUs (via CUDA), and Apple GPUs (via MPS). As a Burn crate, burn-tch benefits from Burn’s architectural flexibility. Developers can extend backend functionalities. For example, custom operations or hand-optimized kernels can be implemented for burn-tch to enhance performance for specific tasks or hardware. This customizability underscores Burn’s commitment to a versatile, adaptable platform.

Installation and Configuration

Setting up burn-tch requires attention to its dependencies, primarily LibTorch. The C++ PyTorch library (LibTorch) must be accessible. By default, adding burn-tch as a dependency downloads the LibTorch version required by tch-rs (2.2.0 at the time of writing, CPU distribution).

For GPU acceleration via CUDA, additional configuration is needed. The TORCH_CUDA_VERSION environment variable must be set before burn-tch is built, for example to cu121 for a CUDA 12.1 build. Ensure the NVIDIA driver is compatible with the chosen CUDA version. A full CUDA Toolkit installation isn’t strictly required, because the LibTorch distribution bundles the CUDA runtime libraries.

For a different LibTorch distribution (specific CUDA version or build), manual download and configuration are needed. Download the desired LibTorch distribution and set environment variables. LIBTORCH should be the absolute path to the LibTorch installation, and the LibTorch library directory should be added to the system’s library path (LD_LIBRARY_PATH on Linux, DYLD_LIBRARY_PATH on macOS, Path on Windows). Once configured, burn-tch can be built and used. Documentation for burn-tch and tch-rs provides detailed instructions. Validation is often done by running example programs using CPU, CUDA, or MPS backends.
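A manual setup is easy to get subtly wrong, so a small preflight check can help. The following is a hedged, std-only sketch and not part of burn-tch or tch-rs: the function names `library_path_var` and `libtorch_lib_dir` are hypothetical, and it assumes the usual LibTorch layout in which shared libraries live in a `lib` subdirectory of the installation root.

```rust
use std::env;
use std::path::{Path, PathBuf};

// Name of the dynamic-library search variable for an OS identifier
// (identifiers follow `std::env::consts::OS`).
fn library_path_var(os: &str) -> &'static str {
    match os {
        "macos" => "DYLD_LIBRARY_PATH",
        "windows" => "Path",
        _ => "LD_LIBRARY_PATH",
    }
}

// Given the value of LIBTORCH, return the library directory that should
// be on the search path. Assumes libraries sit in `<root>/lib`.
fn libtorch_lib_dir(libtorch: &str) -> Result<PathBuf, String> {
    let root = Path::new(libtorch);
    if !root.is_absolute() {
        return Err(format!(
            "LIBTORCH must be an absolute path, got {:?}",
            libtorch
        ));
    }
    Ok(root.join("lib"))
}

fn main() {
    let var = library_path_var(env::consts::OS);
    match env::var("LIBTORCH") {
        Ok(value) => match libtorch_lib_dir(&value) {
            Ok(dir) => println!("make sure {} contains {}", var, dir.display()),
            Err(message) => eprintln!("{}", message),
        },
        Err(_) => println!("LIBTORCH is not set"),
    }
}
```

Running the check before the first build surfaces a relative or missing LIBTORCH value early, instead of as a linker error.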

Deep Dive into burn-train: Empowering the Training Workflow

Customizable Training Loops

Burn is designed for the entire deep learning lifecycle: development, training, and deployment. A key aspect is flexibility in defining and executing training. While Burn provides a high-level Learner API, it empowers users to implement custom training loops. This is valuable when specific requirements exist, like logging loss values with precise timestamps to a time-series database.

Burn’s training uses TrainStep and ValidStep traits (in burn::train). These mandate a step method for training and validation. They are generic over input/output types, allowing flexibility in data processing. When implementing these, concrete input (e.g., a data batch) and output (e.g., loss, predictions) types must be specified.
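The shape of this design can be sketched without the framework. The traits below are simplified stand-ins, not the real definitions from burn::train, whose signatures differ; `Batch`, `TrainOutput`, `ValidOutput`, and `Model` are hypothetical types, and plain floats replace tensors. The point is only that each trait is generic over an input and an output type, and that an implementation pins both to concrete types.

```rust
// Simplified stand-ins for the idea behind TrainStep and ValidStep.
trait TrainStep<Input, Output> {
    fn step(&self, batch: Input) -> Output;
}

trait ValidStep<Input, Output> {
    fn step(&self, batch: Input) -> Output;
}

// Concrete input type: a batch of (feature, target) pairs.
struct Batch {
    items: Vec<(f32, f32)>,
}

// Concrete output types for the two phases.
struct TrainOutput {
    loss: f32,
    grad: f32, // training also needs the gradient
}

struct ValidOutput {
    loss: f32, // validation only reports the loss
}

struct Model {
    weight: f32,
}

impl Model {
    // Mean squared error of `weight * x` against `y`.
    fn mse(&self, batch: &Batch) -> f32 {
        let n = batch.items.len() as f32;
        let sum: f32 = batch
            .items
            .iter()
            .map(|(x, y)| (self.weight * x - y).powi(2))
            .sum();
        sum / n
    }
}

impl TrainStep<Batch, TrainOutput> for Model {
    fn step(&self, batch: Batch) -> TrainOutput {
        let n = batch.items.len() as f32;
        let sum: f32 = batch
            .items
            .iter()
            .map(|(x, y)| 2.0 * (self.weight * x - y) * x)
            .sum();
        TrainOutput { loss: self.mse(&batch), grad: sum / n }
    }
}

impl ValidStep<Batch, ValidOutput> for Model {
    fn step(&self, batch: Batch) -> ValidOutput {
        ValidOutput { loss: self.mse(&batch) }
    }
}

fn main() {
    let model = Model { weight: 1.0 };
    let batch = Batch { items: vec![(1.0, 2.0), (2.0, 4.0)] };
    // Both traits define `step`, so the call names the trait explicitly.
    let train: TrainOutput = TrainStep::step(&model, batch);
    let valid: ValidOutput = ValidStep::step(&model, Batch { items: vec![(3.0, 3.0)] });
    println!("train loss {} grad {}, valid loss {}", train.loss, train.grad, valid.loss);
}
```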

Computing gradients involves invoking backward() on the loss. Unlike PyTorch, backward() doesn’t store gradients with parameters. It returns them as a separate structure. A parameter’s gradient is obtained using grad, taking the parameter tensor and gradients structure as input. This explicit gradient handling offers greater control and is useful for debugging or advanced optimization. When using Burn’s Learner API and built-in optimizers, this is handled internally.
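To make the contrast with PyTorch concrete, here is a toy, std-only model of the pattern. It is not Burn’s autodiff: `Param`, `Loss`, `Gradients`, `forward`, and `sgd_step` are hypothetical names, and the derivative is recorded by hand. What it mirrors is the flow described above: `backward()` consumes the loss and returns a separate gradients container, and a parameter’s gradient is looked up in that container rather than read from the parameter itself.

```rust
use std::collections::HashMap;

// Toy parameter with an identifier, standing in for a parameter tensor.
struct Param {
    id: usize,
    value: f32,
}

// Gradients live in their own container, keyed by parameter id,
// instead of being stored on the parameters.
struct Gradients(HashMap<usize, f32>);

struct Loss {
    value: f32,
    // (parameter id, d loss / d parameter) pairs recorded during forward.
    partials: Vec<(usize, f32)>,
}

impl Loss {
    // Consumes the loss and returns the gradients as a separate structure.
    fn backward(self) -> Gradients {
        Gradients(self.partials.into_iter().collect())
    }
}

impl Param {
    // Look up this parameter's gradient in the container, if present.
    fn grad(&self, grads: &Gradients) -> Option<f32> {
        grads.0.get(&self.id).copied()
    }
}

// Squared error of `w * x` against `y`, recording the partial derivative.
fn forward(w: &Param, x: f32, y: f32) -> Loss {
    let err = w.value * x - y;
    Loss { value: err * err, partials: vec![(w.id, 2.0 * err * x)] }
}

// One manual SGD step; returns the loss value before the update.
fn sgd_step(w: &mut Param, x: f32, y: f32, lr: f32) -> f32 {
    let loss = forward(w, x, y);
    let value = loss.value;
    let grads = loss.backward();
    if let Some(g) = w.grad(&grads) {
        w.value -= lr * g;
    }
    value
}

fn main() {
    let mut w = Param { id: 0, value: 1.0 };
    for _ in 0..3 {
        let loss = sgd_step(&mut w, 1.0, 2.0, 0.25);
        println!("loss {} -> weight {}", loss, w.value);
    }
}
```

Keeping gradients in a separate value makes it explicit which gradients an update uses, which is what gives a custom loop room for inspection or modification before the optimizer step.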

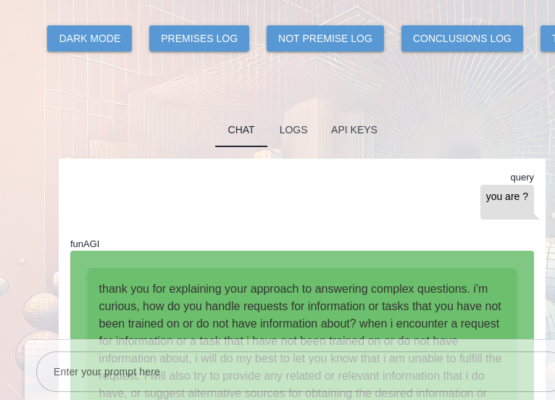

Ergonomic Training Dashboard

Burn provides an integrated, user-friendly dashboard for training monitoring. This offers real-time visualization of key metrics, letting users observe model progress. The dashboard enables analysis of metric progression or recent history, providing insights. A practical feature is the ability to break out of training without crashes, ensuring checkpoints are written or code completes execution. A Text UI with progress bar and plots is available via the tui feature flag, offering terminal-based monitoring. These features contribute to an intuitive, manageable training experience.
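The graceful-stop behavior can be pictured with a shared flag that the training loop checks at a safe point. The sketch below is an assumption about the general pattern, not Burn’s implementation: `Interrupter` and `train` are hypothetical names, a closure stands in for an epoch of training, and recording the epoch number stands in for writing a checkpoint.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

// Shared stop flag; a UI thread or key handler would call `stop`.
#[derive(Clone, Default)]
struct Interrupter(Arc<AtomicBool>);

impl Interrupter {
    fn stop(&self) {
        self.0.store(true, Ordering::Relaxed);
    }
    fn should_stop(&self) -> bool {
        self.0.load(Ordering::Relaxed)
    }
}

// Runs up to `epochs` epochs and returns the epochs for which a
// checkpoint was recorded. `on_epoch` stands in for one epoch of training.
fn train(
    epochs: usize,
    interrupter: &Interrupter,
    mut on_epoch: impl FnMut(usize),
) -> Vec<usize> {
    let mut checkpoints = Vec::new();
    for epoch in 1..=epochs {
        on_epoch(epoch);
        // The checkpoint is recorded before the flag is checked, so a stop
        // request never loses the epoch that just finished.
        checkpoints.push(epoch);
        if interrupter.should_stop() {
            break; // leave the loop cleanly instead of aborting mid-epoch
        }
    }
    checkpoints
}

fn main() {
    let interrupter = Interrupter::default();
    let handle = interrupter.clone();
    let saved = train(10, &interrupter, |epoch| {
        if epoch == 3 {
            handle.stop(); // simulate the user asking to stop
        }
    });
    println!("checkpoints written for epochs {:?}", saved);
}
```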

Metrics and Monitoring

Burn has comprehensive tools for logging, tracking metrics, and managing checkpoints – a “Batteries Included” approach. During training, the model’s forward pass output is a structured item understood by the training machinery. This output struct is crucial for applying the optimizer and holds information for calculating metrics.

Burn provides ClassificationOutput and RegressionOutput for common metrics. These implement traits for seamless use with Burn’s metrics. Developers can configure the learner to track accuracy and loss on training and validation datasets. Beyond standard metrics, Burn offers system-level monitoring (CPU/GPU usage) via the metrics feature flag. This provides a holistic view. Built-in metric types, custom metric definition via the output struct, and system monitoring tools ensure developers have the insights to effectively train and evaluate models.
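The relationship between an output struct and the metrics that read it can be sketched as follows. This is a toy illustration, not Burn’s types: the `ClassificationOutput` here is a hypothetical stand-in that borrows the name only for readability, and `Metric`, `Accuracy`, `LossMetric`, and `argmax` are assumptions made for the example. Nested vectors replace tensors.

```rust
// Toy output item; a hypothetical stand-in, not Burn's struct.
struct ClassificationOutput {
    loss: f32,
    // One row of class scores per sample.
    logits: Vec<Vec<f32>>,
    // Index of the correct class per sample.
    targets: Vec<usize>,
}

// A metric reads whatever it needs from the output item.
trait Metric {
    fn name(&self) -> &'static str;
    fn compute(&self, output: &ClassificationOutput) -> f32;
}

struct Accuracy;
struct LossMetric;

// Index of the largest score in a row (first one wins on ties).
fn argmax(row: &[f32]) -> usize {
    let mut best = 0;
    for (i, v) in row.iter().enumerate() {
        if *v > row[best] {
            best = i;
        }
    }
    best
}

impl Metric for Accuracy {
    fn name(&self) -> &'static str {
        "accuracy"
    }
    fn compute(&self, output: &ClassificationOutput) -> f32 {
        let mut correct = 0;
        for (row, target) in output.logits.iter().zip(&output.targets) {
            if argmax(row) == *target {
                correct += 1;
            }
        }
        correct as f32 / output.targets.len() as f32
    }
}

impl Metric for LossMetric {
    fn name(&self) -> &'static str {
        "loss"
    }
    fn compute(&self, output: &ClassificationOutput) -> f32 {
        output.loss
    }
}

fn main() {
    let output = ClassificationOutput {
        loss: 0.5,
        logits: vec![vec![0.1, 0.9], vec![0.8, 0.2]],
        targets: vec![1, 1],
    };
    let metrics: [&dyn Metric; 2] = [&Accuracy, &LossMetric];
    for metric in metrics.iter() {
        println!("{}: {}", metric.name(), metric.compute(&output));
    }
}
```

Because every metric takes the same output item, adding a custom metric only requires another implementation of the trait, which is the extensibility the paragraph above points to.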

Use Cases of Burn and burn-tch in Action

Burn, with burn-tch, is versatile for various deep learning applications. Its portability allows training and deployment across environments: mobile, web browsers, desktops, embedded systems, and GPU clusters. Burn simplifies the transition from training to deployment, often eliminating code modifications typically needed in PyTorch.

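Burn’s interoperability is enhanced by ONNX support, which allows importing models exported from frameworks such as TensorFlow and PyTorch. Burn can also load PyTorch weights directly into Burn-defined models; this import is handled by the burn-import crate rather than by the burn-tch backend. Together, these options let users with existing PyTorch models bring them into Burn.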

The Burn project provides illustrative examples: MNIST training/inference (CPU/GPU, web demos), image classification, text classification (transformers), text generation, and regression. Burn is well-suited for specific domains. Its efficiency and portability are useful for IoT, especially industrial contexts with resource constraints and real-time needs. Efficient memory usage and native performance make it excellent for edge devices. Exploration into using Burn as a web application backend is ongoing. These use cases highlight Burn’s flexibility and broad applicability.

Advantages of Choosing Burn and burn-tch: Unleash the Power!

Choosing Burn, especially with burn-tch, offers compelling advantages: flexibility, high performance, and portability. It is engineered for fast execution, its architecture maximizes composability, and its backend abstraction allows deployment across diverse hardware.

Leveraging Rust, Burn offers enhanced reliability through memory safety and safe concurrency, preventing pitfalls like use-after-free bugs and data races. Burn provides an intuitive, well-documented modeling API. A dynamic computational graph and JIT compiler contribute to efficiency.

Burn incorporates automatic kernel fusion, optimizing execution. Its asynchronous model enhances performance. Thread safety, via Rust’s ownership, enables multi-device training. Burn features intelligent memory management.

Burn-tch lets developers tap into the PyTorch ecosystem while writing high-performance Rust. It makes it easier to fit Burn into existing PyTorch workflows and to reuse pre-trained models. Because tch-rs wraps the C++ bindings, application code stays in Rust, and when one of Burn’s native backends is used instead, the LibTorch dependency is no longer needed, which reduces deployment complexity.

| Feature | Burn (with burn-tch) | PyTorch | TensorFlow |
|---|---|---|---|
| Language | Rust | Python (with C++ backend) | Python (with C++ backend) |
| Backend Flexibility | High (Multiple native backends + LibTorch via burn-tch) | High (CPU, CUDA, MPS, others) | High (CPU, GPU, TPU) |
| Performance Focus | Very High (Native Rust, JIT, Kernel Fusion, Asynchronous Execution) | High (Optimized C++ backend) | High (Optimized C++ backend, Graph optimization) |
| Portability | Excellent (Abstracted backend, targets diverse hardware) | Good (Requires platform-specific builds) | Good (TensorFlow Lite for mobile/embedded) |
| Safety Features | Excellent (Rust’s memory and thread safety) | Limited (Relies on Python and C++) | Limited (Relies on Python and C++) |
| Ease of Deployment | High (Native Rust binaries, simplified transition from training) | Moderate (Can require code changes) | Moderate (TensorFlow Serving, TensorFlow Lite) |
| Ecosystem Integration | Good (Growing Rust ML ecosystem, direct PyTorch weight import) | Excellent (Large and mature ecosystem) | Excellent (Large and mature ecosystem, TensorFlow.js) |
| Training Dashboard | Built-in ergonomic dashboard | Third-party tools (e.g., TensorBoard) | TensorBoard integrated |
| Model Import | ONNX, PyTorch weights (via burn-import) | ONNX, limited support for other formats | ONNX, Keras models |

This comparison highlights Burn’s unique position as a Rust-native framework leveraging PyTorch’s backend, offering performance, safety, and portability.

Ignite the Future of Deep Learning with Burn!

Burn is a giant leap forward, integrating deep learning into the Rust ecosystem. Its design, rooted in Rust’s safety, performance, and developer ergonomics, positions it as a promising platform. As Rust gains traction, Burn’s role as a native deep learning framework will become increasingly important, especially where high performance, reliability, and efficiency are crucial.

The burn-tch backend enhances Burn, bridging it to the PyTorch ecosystem. This lets developers harness PyTorch’s power while benefiting from Rust’s safety and Burn’s architecture. Rust’s safety, performance, and Burn’s abstractions create a compelling foundation for next-generation deep learning, especially where Python-based frameworks fall short.

While Burn is younger than PyTorch and TensorFlow, its active development and vibrant community suggest a promising trajectory. As Burn matures, it has the potential to become a cornerstone framework for deep learning in Rust, offering a powerful, safe alternative.

Ready to experience the future of deep learning? Dive into Burn and unleash the power of Rust for your next AI breakthrough.